Trust in Artificial Intelligence

AI Assurance | safeAI

AI assurance is a key factor in the successful and responsible deployment of artificial intelligence.

It encompasses all measures aimed at ensuring the safety, reliability, and trustworthiness of AI systems throughout their entire lifecycle.

As a partner for safety-critical applications, we support you with holistic approaches and proven methodologies – from concept and design through to testing, validation, and operation.

With safeAI, we have developed a dedicated framework for safeguarding artificial intelligence in safety-critical domains. By combining rigorous verification methods, system-level analyses, and organisational measures, we provide a pragmatic and scalable solution with trust and reliability at its core. This supports the highest safety and quality standards, whether in autonomous driving, defence, or industrial automation.

Our Contribution to the Trustworthiness and Reliability of your AI

We support companies and organisations throughout the entire development and operational lifecycle of AI systems. With a holistic perspective, we identify risks at an early stage and establish the foundation for reliable, trustworthy, and successful AI applications.

✅ Comprehensive safety and trust frameworks

We address all relevant aspects of your AI systems – from architecture and algorithms to organisational processes – and develop solutions that systematically embed safety and trustworthiness.

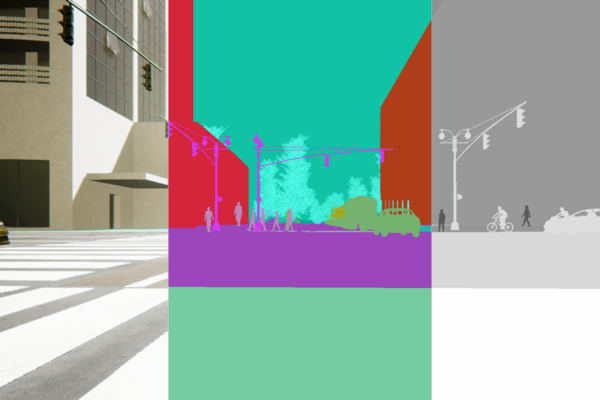

✅ Advanced testing and validation environments

With state-of-the-art testing methods and simulation environments, we evaluate the performance and stability of your AI solutions before they go into production.

✅ Statistically grounded safety measures

We apply statistical methods to identify and mitigate potential risks. In this way, we effectively protect your AI systems against threats and disruptions.
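As an illustration only, and not a description of the safeAI methodology, one common statistical argument bounds the failure probability of a system from a test campaign in which no failures were observed. The sketch below assumes independent, identically distributed test cases; the function names `zero_failure_upper_bound` and `required_tests` are hypothetical.

```python
import math

def zero_failure_upper_bound(n_tests: int, alpha: float = 0.05) -> float:
    """Exact one-sided upper bound on the failure probability after
    n_tests independent tests with zero failures, at confidence 1 - alpha.
    Solves (1 - p)^n = alpha for p."""
    if n_tests <= 0:
        raise ValueError("n_tests must be positive")
    return 1.0 - alpha ** (1.0 / n_tests)

def required_tests(target_p: float, alpha: float = 0.05) -> int:
    """Smallest number of failure-free tests needed to claim that the
    failure probability is below target_p at confidence 1 - alpha."""
    if not 0.0 < target_p < 1.0:
        raise ValueError("target_p must lie strictly between 0 and 1")
    return math.ceil(math.log(alpha) / math.log(1.0 - target_p))

if __name__ == "__main__":
    # 300 failure-free tests bound the failure probability at roughly 1 %
    # with 95 % confidence (close to the well-known "rule of three", 3 / n).
    print(zero_failure_upper_bound(300))
    print(required_tests(1e-3))
```

The bound only holds if the test cases are representative of real operating conditions, which is why such calculations are usually combined with system-level analysis rather than used on their own.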

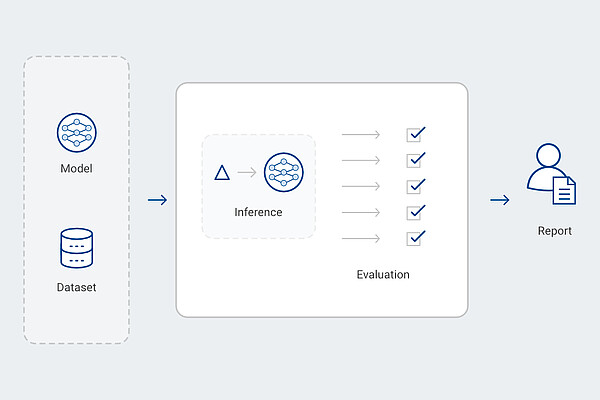

✅ Technical assurance for trustworthy AI

We provide tool-supported evaluation and analysis of AI models and their underlying datasets across five central dimensions: data analysis, performance, robustness, uncertainty, and explainability. Our assessment approach creates a deep understanding of model behaviour and capabilities. The resulting evaluation report identifies strengths, potential risks, and limitations – forming the basis for effective safety measures, regulatory compliance, and trustworthy AI applications.
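To make three of these dimensions concrete, the following minimal sketch computes simple stand-in metrics for performance, robustness, and uncertainty from a classifier's predicted class probabilities. It is an assumption-laden illustration, not the safeAI tooling: the function names, the choice of accuracy and Shannon entropy as metrics, and the report keys are all hypothetical, and data analysis and explainability are not covered.

```python
import math

def accuracy(probs, labels):
    """Performance: share of samples whose most probable class equals the label."""
    hits = sum(1 for p, y in zip(probs, labels) if p.index(max(p)) == y)
    return hits / len(labels)

def predictive_entropy(p):
    """Uncertainty: Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(q * math.log(q) for q in p if q > 0.0)

def evaluation_report(clean_probs, perturbed_probs, labels):
    """Summarise performance, robustness, and uncertainty in one dictionary.

    clean_probs:     predictions on the unmodified inputs
    perturbed_probs: predictions on the same inputs after a perturbation
                     (for example added noise); how the perturbation is
                     produced is outside the scope of this sketch
    """
    clean_acc = accuracy(clean_probs, labels)
    perturbed_acc = accuracy(perturbed_probs, labels)
    mean_entropy = sum(predictive_entropy(p) for p in clean_probs) / len(clean_probs)
    return {
        "performance": clean_acc,
        "robustness_drop": clean_acc - perturbed_acc,
        "mean_uncertainty": mean_entropy,
    }

if __name__ == "__main__":
    labels = [0, 1, 1]
    clean = [[0.9, 0.1], [0.2, 0.8], [0.4, 0.6]]
    perturbed = [[0.6, 0.4], [0.7, 0.3], [0.4, 0.6]]
    print(evaluation_report(clean, perturbed, labels))
```

A large accuracy drop under perturbation, or high entropy on inputs the model classifies correctly, are the kind of findings an evaluation report would flag as potential risks or limitations.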

With our extensive experience in safeguarding highly complex systems, we work together with our partners to shape a trustworthy, AI-enabled future.

safeAI@IABG

We combine the latest insights from AI research, standardisation, and regulation with IABG’s longstanding expertise in testing, analysis, and certification processes. Through our active participation in national, European, and international AI standardisation bodies, we contribute to the development of new standards and guidelines that enhance the value of AI systems. By initiating DIN SPEC 92005, "Artificial Intelligence – Uncertainty quantification in machine learning", we have made a fundamental contribution in this field.
